Bob Newman looks at why colour balance problems arise and how they can be dealt with in-camera

Colour balance (sometimes called white balance) is a topic that can be mystifying. Generally, our eyes (or, more strictly, our visual cortex) will see colours as true almost whatever the lighting, although there are some light sources, such as yellow sodium vapour light, that will cause some colours (reds, in the case of sodium lighting) to be misrendered.

Cameras, however, seem to be much more sensitive to ambient lighting, and part of the craft of a photographer is ensuring that colours are rendered properly. Failure to do this can result in some strange-looking photographs, with skin tones in particular suffering. In this article, I’ll discuss why the problem of white balance arises and how it is dealt with inside the camera.

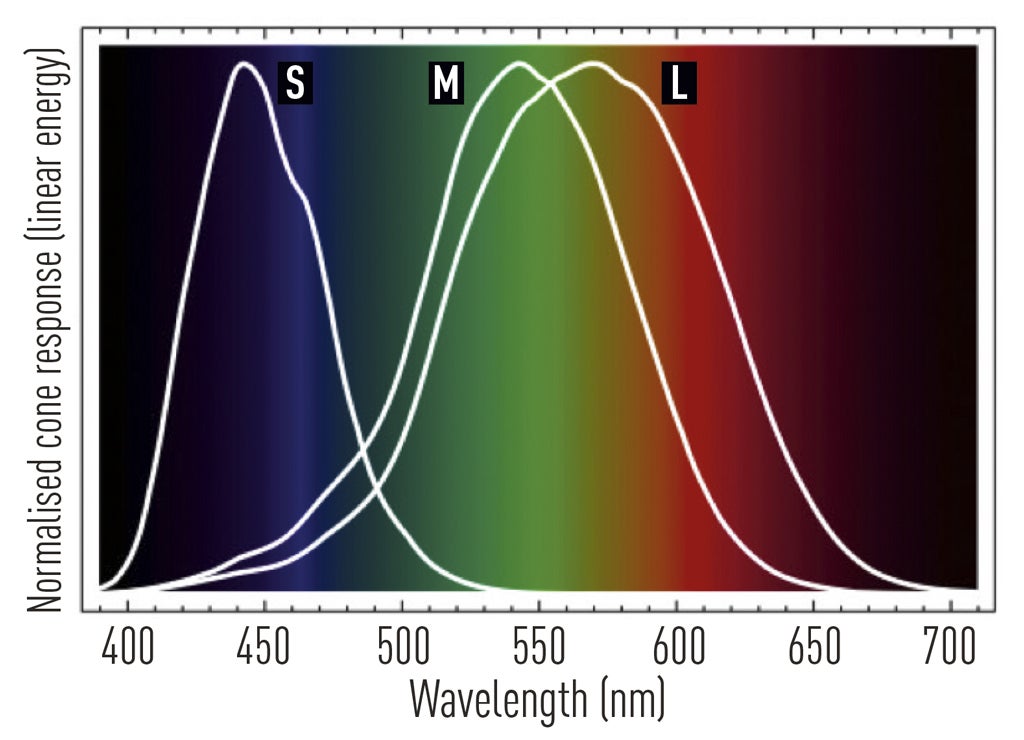

Humans detect the colour of what they see according to a tri-stimulus system. It doesn’t have to be that way: many birds have a tetra-stimulus system with four different types of colour detector in the eye, while most mammals have only two. We see a ‘colour’ according to the relative strengths of the signals in our three detectors (S, M and L cones). The wavelength bands of each of these three stimuli (‘blue’, ‘green’ and ‘red’, although more accurately ‘short’, ‘medium’ and ‘long’) are shown in the accompanying diagram.

The problem of colour balance arises because we view most objects by reflected light, and the colour of the light can change from case to case. As the colour mix of the incident light changes, so will the mix of the light reflected from the object, resulting in a different response from the cones in our eyes. This should result in us seeing different colours, but within limits, it doesn’t. This is due to the image processing done by the visual cortex of our brains. Based on a priori knowledge of what known colours should look like, the brain adjusts our perception of the colours to maintain a reasonably constant-looking image.
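The effect of the incident light on what reaches the eye can be sketched with a toy three-band model. This is only an illustration: the band values for the two lights and the grey card are made-up numbers, not measurements, and real spectra are continuous rather than three discrete bands.

```python
# Toy three-band model of reflected light.
# All numbers are illustrative assumptions, not measured data.
# Bands are ordered (short, medium, long) wavelengths.

def reflected(illuminant, reflectance):
    """Multiply the incident light by the surface reflectance, band by band."""
    return tuple(i * r for i, r in zip(illuminant, reflectance))

daylight = (1.0, 1.0, 1.0)    # hypothetical neutral light
tungsten = (0.4, 0.7, 1.0)    # hypothetical warm light, weak at short wavelengths
grey_card = (0.5, 0.5, 0.5)   # a surface that reflects every band equally

under_daylight = reflected(daylight, grey_card)
under_tungsten = reflected(tungsten, grey_card)

print(under_daylight)
print(under_tungsten)
```

The same grey card sends equal amounts of all three bands to the eye under the neutral light, but a long-wavelength-heavy mix under the warm light. The visual cortex compensates for that difference; a camera sensor simply records it.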

The photographic imaging process is designed to directly stimulate the three sets of cones. The three emitters or dyes (depending on whether we are looking at a light-emitting or a reflective display) that colour the pixels are each designed to stimulate one type of cone, so far as is possible.

The problem is that, when we view a photograph, the brain’s image processing has no context available from the original scene to work out the required colour adjustments, so the reproduction chain must do this, otherwise colours will be wrongly rendered. Most natural lights are generated by something getting hot, and the temperature has a particular effect on the spectrum, according to the physical principle known as ‘black body radiation’.
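The link between temperature and spectrum can be made concrete with Planck's law for black body radiation. The sketch below is a minimal illustration: the function names are hypothetical, and the 450nm and 650nm wavelengths are simply convenient stand-ins for 'blue' and 'red', not values taken from any camera.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
K = 1.380649e-23     # Boltzmann constant, J/K

def planck(wavelength_m, temp_k):
    """Spectral radiance of a black body at one wavelength (Planck's law)."""
    prefactor = 2.0 * H * C**2 / wavelength_m**5
    # expm1(x) computes exp(x) - 1 accurately
    return prefactor / math.expm1(H * C / (wavelength_m * K * temp_k))

def blue_to_red_ratio(temp_k, blue_nm=450.0, red_nm=650.0):
    """Ratio of radiance at an assumed 'blue' and 'red' wavelength."""
    return planck(blue_nm * 1e-9, temp_k) / planck(red_nm * 1e-9, temp_k)

for temp in (3000, 6500):   # roughly tungsten-like and daylight-like
    print(temp, "K:", round(blue_to_red_ratio(temp), 2))
```

Under these assumptions, the cooler source emits far less blue than red, while the hotter one emits more blue than red. This is why a scene lit by a cooler source looks orange if no correction is applied.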

So, if we know the temperature of the body that generated the light, then we can process a shot to produce the required stimuli on viewing, and it will look ‘right’ to the viewer. This adjustment is essentially correcting the relative weights of the three stimuli, so can be done completely in processing.
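Correcting the relative weights of the three stimuli amounts to multiplying each channel by a gain. The sketch below assumes the gains are derived from the recorded value of a known neutral object rather than from a colour temperature directly; the function names and pixel values are hypothetical, and real cameras work from their own calibration data.

```python
def gains_from_neutral(neutral_rgb):
    """Per-channel gains that make a neutral reference come out equal in R, G and B.

    Green is left unchanged and the other two channels are scaled to match it.
    """
    r, g, b = neutral_rgb
    return (g / r, 1.0, g / b)

def apply_gains(pixel, gains):
    """Scale each channel of a pixel by its gain."""
    return tuple(p * k for p, k in zip(pixel, gains))

# Hypothetical recorded value of a grey card under warm light: too red, too little blue.
grey_under_warm_light = (0.50, 0.35, 0.20)

gains = gains_from_neutral(grey_under_warm_light)
balanced = apply_gains(grey_under_warm_light, gains)

print(gains)
print(balanced)
```

After the gains are applied, the grey card has equal values in all three channels, and the same gains are then applied to every other pixel in the shot.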